“The only way to discover the limits of the possible is to go beyond them into the impossible.” – Arthur C. Clarke.

This quote elegantly sets the stage for Google’s Gemini AI, a technological marvel that ventures boldly into the realm of the ‘impossible.’ Gemini AI marks the first step towards Artificial General Intelligence, a state in which AI can perform all the tasks that humans can. As we delve into its applications and multimodal nature, the ingenuity of Gemini AI becomes increasingly apparent.

Gemini AI is the world’s foremost Multimodal Large Language Model (MLLM) – boasting unparalleled proficiency in interpreting inputs across various languages and modalities. This advanced AI seamlessly processes these diverse inputs, delivering tailored outputs for an array of complex tasks.

Unveiling the Multimodal Nature of Gemini AI

Gemini AI represents a vanguard in the realm of artificial intelligence, a testament to the wonders of deep learning and the quest for artificial general intelligence (AGI). At its core, this multimodal AI model is a microcosm of Google’s vision for a more holistic, perceptive, adaptable, and responsible form of AI. This model’s ability to understand and process text, images, code, sound, and video is not merely an incremental improvement but a substantial leap toward the realization of AGI—a type of intelligence that can understand, learn, and apply knowledge in an integrative manner akin to human intelligence.

The Wonders of Gemini AI Through a Deep Learning Lens

From a deep learning perspective, the multimodal nature of Gemini AI is particularly fascinating. Traditional AI models typically specialize in one domain, such as natural language processing for text or convolutional neural networks for images. However, Gemini AI transcends this specialization by integrating multiple modalities into a cohesive learning framework. It utilizes a sophisticated form of representation learning, where the model learns to identify and utilize abstract representations or features from the data it processes, irrespective of the modality.

Futuristic look of Multimodal AI

The deep learning algorithms powering Gemini AI are designed to identify patterns and make associations between disparate data types. For

example, it can link a text to a corresponding image or video, understanding the context and nuances a single-modality model would miss. This is achieved through advanced neural network architectures (fundamental building blocks of AI) that can handle the complexity and dimensionality of multimodal data, extracting and merging relevant features to generate coherent and contextually appropriate responses.

Image representing features of Gemini

Towards Artificial General Intelligence

The pursuit of AGI has been likened to the holy grail of AI research. AGI requires a system capable of understanding and reasoning across a broad range of cognitive tasks that humans can perform. Gemini AI’s multimodal capabilities represent a critical step in this direction. By processing and synthesizing information across various sensory inputs, Gemini AI begins to mimic the integrative cognitive functions of the human brain.

The concept of ‘neural-symbolic integration,’ where deep learning (neural) models incorporate and manipulate symbolic data, is central to

AGI. Gemini AI’s ability to generate code from textual descriptions or translate spoken language into action illustrates this integration. It’s not just learning patterns; it’s understanding and applying concepts innovatively.

The Implications of a Multimodal AGI

The implications of Gemini AI’s multimodal proficiency are profound. In healthcare, it could mean a system that listens to a patient’s verbal symptoms, processes their written medical history, analyzes their imaging scans, and provides a diagnosis and treatment plan. Autonomous

vehicles could integrate visual data, audio cues, and text-based information, such as traffic updates, to navigate safely.

The types of modalities that Gemini AI can process.

Furthermore, Gemini AI’s deep learning framework is designed with scalability in mind. As the model learns from more data and more types of interactions, its ability to generalize and adapt to new tasks could grow exponentially. This scalability is essential for AGI, which must be capable of continuous learning and adaptation.

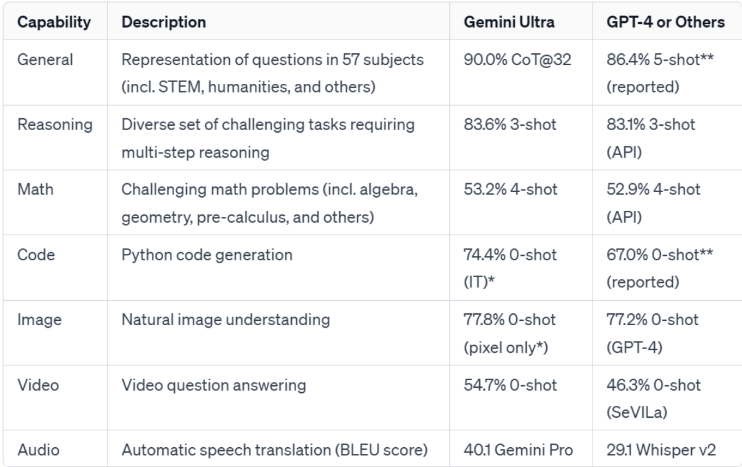

Performance of Benchmarks – Making it the best!

Here, we present the statistical data that showcases the experiment conducted on Gemini AI and its performance in those experiments.

The table presented above compares the Gemini AI model and other state-of-the-art MLLMs. The data clearly illustrates that Gemini AI outperforms its counterparts in every aspect, showcasing its dominance in the field.

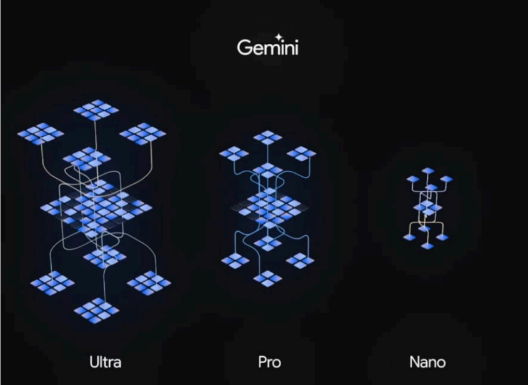

The Threefold Structure: Ultra, Pro, Nano

Gemini AI is structured into three versions, each tailored to specific user needs and contexts:

Gemini Ultra: This is the most powerful model, ideal for data centers and enterprises dealing with complex tasks. It excels in deep data analytics, advanced AI research, and extensive machine learning tasks.

Gemini Pro: Balancing high capability with efficiency, this model suits businesses and AI services requiring versatile AI applications. It powers Google’s AI services like Bard and enhances customer service bots.

Gemini Nano: Designed for mobile solutions, this efficient model can run offline on Android devices, making it ideal for on-device tasks and mobile app integrations.

The image represents three different versions of Gemini AI.

Power of Gemini and its environment understanding

Gemini shows human-level understanding and reasoning ability. This has been depicted through the following examples.

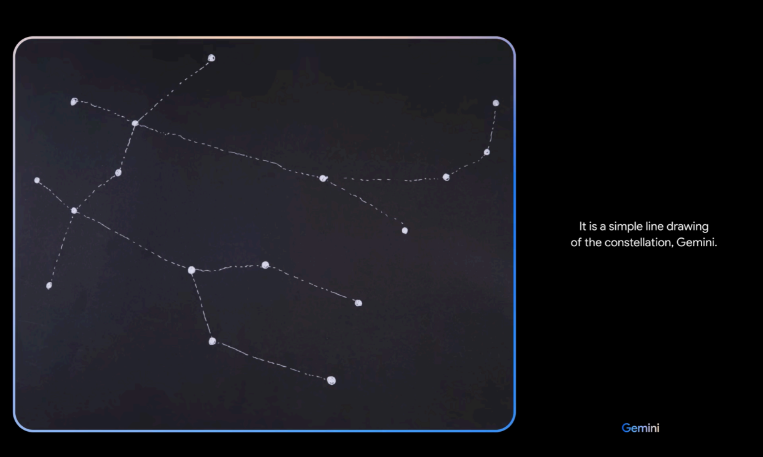

Excellent Zero Shot recognition performance

The image here illustrates the performance of the Gemini AI model when analyzing an input image. Where most AI models might identify the image as a collection of lines and dots, the Gemini AI demonstrates its advanced capabilities by accurately recognizing it as a drawing of the Gemini constellation. This distinction is evident from the response of the Gemini model displayed on the right side of the image.

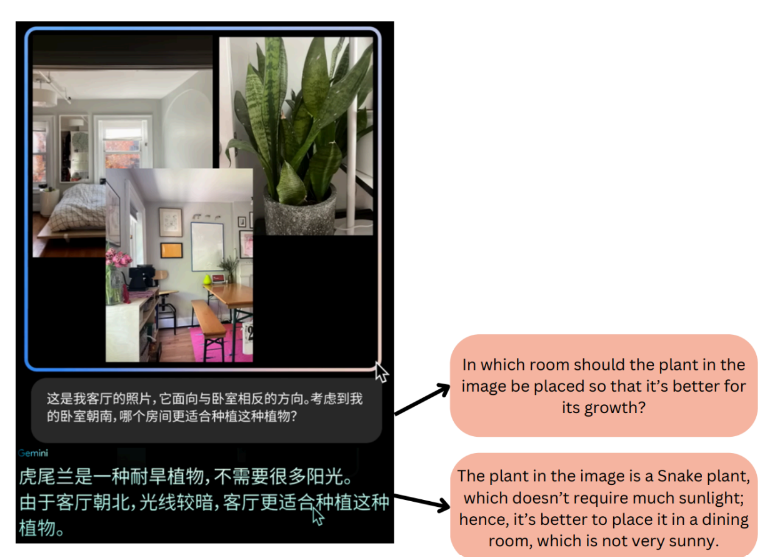

Multilingual Understanding

The following example shows the multilingual and multimodal performance of the Gemini model. Here, the Gemini model is prompted with Chinese text and three images. The first image is of a sunny bedroom, the second is of a plant, and the third is the dining room image.

This example showcases the model’s multilingual understanding and demonstrates its capability to handle real-world tasks effectively.

This example showcases the model’s multilingual understanding and demonstrates its capability to handle real-world tasks effectively.

Applications that Transform Realities

Healthcare: Gemini AI revolutionizes patient care by efficiently analyzing medical images, patient histories, and current research, leading to more accurate diagnoses and personalized treatment plans. Its prowess in processing vast medical data enhances treatment effectiveness.

Financial Services: Gemini AI enhances risk management and fraud detection by analyzing transaction patterns, market trends, and economic reports. This capability allows financial institutions to make more informed decisions and offer better services to their customers.

Educational Support: In the field of education, Gemini AI aids in creating personalized learning experiences. By processing educational content, student feedback, and performance data, it can tailor educational materials to individual learning styles, enhancing the overall

educational experience.

Creative Industries: In creative domains, such as digital art and music, Gemini AI’s ability to process and generate images and sounds paves the way for new forms of artistic expression. Artists and musicians can collaborate with Gemini AI to explore new creative horizons.

Language Translation and Voice Recognition: An excellent tool for real-time translation and voice recognition. This enhances communication across language barriers and makes technology more accessible to people worldwide.

Responsible, Safe, and Scalable: The Ethical Backbone of Gemini AI

In the contemporary landscape of AI, where power and potential are paramount, the discourse often shifts to a crucial aspect: responsibility. Google’s Gemini AI, a beacon of technological advancement, is built upon a foundation of safety and ethics.

Gemini AI has undergone novel research to identify and mitigate potential risk areas. Google Research’s adversarial testing techniques* have been employed to uncover and

address critical safety issues preemptively. This proactive stance in safety evaluation sets a new standard in AI development, ensuring that Gemini AI is powerful, secure, and reliable.

Google employs tools like Real Toxicity Prompts to ensure content safety in Gemini AI’s training, analyzing 100,000 expert-developed prompts to align Gemini’s output with strict content policies and ethical standards.

The Vision and Future

In the grand tapestry of technological advancement, Google’s Gemini AI represents a pioneering leap, charting a course toward a future where artificial intelligence achieves human-like understanding across a multitude of modalities. With its ability to interpret text, code, audio, images, and video, Gemini AI is not merely a marvel of modern engineering; it is the harbinger of a new epoch where AI’s grasp of context and nuance mirrors the depth and breadth of human cognition.

At its core, Gemini AI is underpinned by a commitment to responsible innovation. It is the vanguard of a generation of AI that is safe, ethical, and scalable — a testament to Google’s dedication to advancing AI that is attuned to human values and societal well-being. As we peer into the possibilities of tomorrow, Gemini AI stands as a milestone in our quest for artificial general intelligence, promising a future where

technology’s potential is matched by its prudence and its utility is as widespread as its integrity. This is the vision of Gemini AI — a future where AI and humanity evolve in tandem, unlocking new frontiers with every step forward.

* Details can be found at the following link: https://blog.research.google/2023/11/responsible-ai-at-google-research_16.html

Reference

1. University of San Diego (2023) “Artificial Intelligence in Finance.” Online Degrees. Retrieved from https://onlinedegrees.sandiego.edu/artificial-intelligence-finance/

2. (2023, March 8) “Demystifying AI in Healthcare in India.” Forbes India. Retrieved from

https://www.forbesindia.com/article/isbinsight/demystifying-ai-in-healthcare-in-india/87547/1

3. Google AI Team (2023). “Google Gemini AI: A Step Forward in Responsible AI.” Blog.Google. Retrieved from

https://blog.google/technology/ai/google-gemini-ai/#responsibility-safety

4. Google (2023). “Testing Gemini: Understanding environments” YouTube.

Retrieved from https://www.youtube.com/watch?v=JPwU1FNhMOA

5. Google 2023) “Gemini: Google’s newest and most capable AI model” YouTube. Retrieved from https://www.youtube.com/watch?v=jV1vkHv4zq8&t=190s

6. “StyleTTS-2: Human-Level Text-to-Speech with Large Speech Language Models.” Unite.AI. Retrieved from

StyleTTS 2: Human-Level Text-to-Speech with Large Speech Language Models

Recent Comments